Monitoring Proxmox VE with Grafana Stack on LXC

Build enterprise-grade Proxmox monitoring with Grafana, Prometheus & Telegram alerts. Step-by-step LXC setup with production-ready configs.

I learned this lesson the hard way: discovering your Proxmox host is melting down shouldn’t be a surprise. Trust me, finding out your storage is 98% full at 2am is not fun.

This guide walks you through setting up proper monitoring (Grafana + Prometheus + AlertManager) in an LXC container that’ll ping you on Telegram before things catch fire. No agents to install on the host, just a clean API-based setup.

⏱️ Time to complete: 45-60 minutes (hands-on, grab coffee)

Why Bother Monitoring Proxmox?

Look, whether you’re running a homelab or production infrastructure, flying blind is asking for trouble. I’ve seen way too many “unexpected” crashes that would’ve been totally preventable with basic monitoring.

Here’s what actually happens when you don’t monitor:

The silent killers:

- Disk failure / ZFS degraded (discovered when it’s too late)

- Root filesystem at 100% (good luck SSH’ing in)

- RAM/swap exhausted (everything grinds to a halt)

- Some VM eating 100% CPU (but which one?)

- Backups silently failing (for weeks… ask me how I know)

- Node goes down after an update (at 3am, naturally)

- Crypto-miner hijacked a container (yes, this happens)

The reality: These issues don’t announce themselves. Your storage doesn’t email you when it hits 90%. Your node doesn’t text you before it overheats. You find out when something breaks.

That’s why we monitor.

Design the monitoring system

graph TB

subgraph proxmox["Proxmox VE Host<br/>192.168.100.4:8006"]

vm1[VM/LXC]

vm2[VM/LXC]

vm3[VM/LXC]

vmore[...]

end

subgraph grafana_stack["Grafana-Stack LXC<br/>192.168.100.40"]

pve[PVE Exporter :9221<br/>Pulls metrics from Proxmox API]

prometheus[Prometheus :9090<br/>- Scrapes metrics 15s interval<br/>- Stores data 15-day retention<br/>- Evaluates alert rules]

grafana[Grafana :3000<br/>Dashboards]

alertmanager[AlertManager :9093<br/>Notifications]

end

slack[Slack<br/>Critical]

telegram[Telegram<br/>Operational]

proxmox -->|HTTPS API<br/>read-only| pve

pve -->|metrics| prometheus

prometheus -->|queries| grafana

prometheus -->|alerts| alertmanager

alertmanager -->|critical alerts| slack

alertmanager -->|operational alerts| telegram

style proxmox fill:#e1f5ff,stroke:#0288d1,stroke-width:2px

style grafana_stack fill:#f3e5f5,stroke:#7b1fa2,stroke-width:2px

style slack fill:#fff3e0,stroke:#ef6c00,stroke-width:2px

style telegram fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

style pve fill:#fff9c4,stroke:#f9a825

style prometheus fill:#ffebee,stroke:#c62828

style grafana fill:#e3f2fd,stroke:#1565c0

style alertmanager fill:#fce4ec,stroke:#c2185b

How This Setup Works

I went with an LXC container instead of a full VM because why waste 4GB of RAM on another kernel? Here’s the architecture:

The Proxmox side: Uses a read-only API token (so even if someone somehow gets access, they can’t break anything). No agents to install, no kernel modules - just hit the HTTPS API on port 8006.

The monitoring LXC (192.168.100.40):

- pve-exporter - Queries Proxmox API every 15 seconds, grabs metrics for nodes, VMs, ZFS pools, backups, everything

- Prometheus - Scrapes those metrics, keeps 15 days of history, checks if anything’s on fire

- Grafana - Makes it all pretty with ready-made dashboards

- AlertManager - Sends you notifications when things go sideways:

  - Critical stuff (node down, disk failure) → Slack

  - Operational warnings (high load, backup failed) → Telegram

What’s Good (and What’s Not)

Why I like this setup:

- Simple - Just a handful of services in one LXC

- Lightweight - Uses maybe 2GB RAM total

- Safe - Read-only API token can’t break anything

- Free - Zero licensing costs

- Beautiful dashboards out of the box

What could be better:

- Single point of failure (the LXC goes down, monitoring’s gone - though you can enable HA)

- Limited to 15 days history by default (fine for most cases, but you can extend it)

- No built-in long-term storage (for that you’d need Thanos or VictoriaMetrics)

- Exposed to your network (put it behind a reverse proxy if you’re paranoid)

For a homelab or small production setup? This is plenty. If you’re running 50+ nodes, you’ll want something beefier.
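To get a feel for what the 15-day default costs in disk space, the Prometheus docs give a rough sizing formula: retention in seconds × ingested samples per second × bytes per sample. Here is a minimal sketch of that arithmetic. The 2,000 active series and 2 bytes per sample are assumptions for illustration (check your own series count in Prometheus), and `estimate_tsdb_mb` is a name I made up:

```shell
#!/bin/sh
# Rough Prometheus disk estimate:
#   bytes = retention_seconds * samples_per_second * bytes_per_sample
# The series count and bytes-per-sample figures are assumptions; the 15s
# interval and 15-day retention match this guide's defaults.
estimate_tsdb_mb() {
    # $1 = active series, $2 = scrape interval (s), $3 = retention (days), $4 = bytes per sample
    awk -v series="$1" -v interval="$2" -v days="$3" -v bps="$4" 'BEGIN {
        samples_per_second = series / interval
        bytes = days * 86400 * samples_per_second * bps
        printf "%.0f\n", bytes / 1024 / 1024
    }'
}

echo "15 days: $(estimate_tsdb_mb 2000 15 15 2) MB"
echo "90 days: $(estimate_tsdb_mb 2000 15 90 2) MB"
```

At this scale the history is small, so extending retention is mostly a matter of raising Prometheus's `--storage.tsdb.retention.time` flag.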

Prerequisites

This guide is based on my own local setup:

- Proxmox VE 9.1.1 installed and running

- Debian 13 LXC template downloaded in Proxmox

- Basic understanding of Linux commands

- Telegram account (for alerts)

- Slack workspace (optional, for critical alerts)

Step 1: Create LXC Container

Create an unprivileged Debian 13 LXC container for the Grafana stack.

LXC containers are the optimal choice for this monitoring stack, offering significant resource efficiency without sacrificing functionality.

Key Benefits:

- Lower overhead: LXC shares the host kernel, consuming roughly 35-45% less RAM than a comparable VM (8GB LXC vs 12-14GB VM)

- Faster performance: Near-native CPU performance without virtualization overhead

- Quick startup: Container boots in 2-3 seconds vs 30-60 seconds for a VM

- Smaller disk footprint: 50GB LXC vs 80-100GB VM (no separate OS kernel/modules)

- Easy snapshots: Instant container snapshots for backup/rollback

No Feature Limitations:

- All Grafana stack components (Grafana, Prometheus, Loki, AlertManager) run perfectly in LXC

- Network services work identically to VMs

- No Docker required (native package installations)

- Full access to Proxmox API for metrics collection

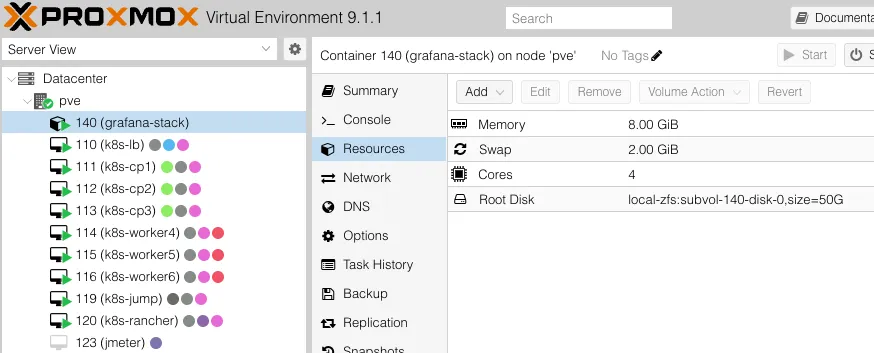

Specifications:

- VMID: 140

- Hostname: grafana-stack

- Template: Debian 13 standard

- CPU: 4 cores

- RAM: 8GB

- Disk: 50GB (local-zfs)

- Network: Static IP 192.168.100.40/24

# On Proxmox host
# Options, in order: container name, 4 cores, 8GB RAM, 2GB swap, 50GB root disk,
# static IP, DNS server, unprivileged (safer), nesting off (no Docker inside this
# container), your workstation's public SSH key file, start after creation.
# Comments can't follow a trailing backslash, so they live up here.
pct create 140 local:vztmpl/debian-13-standard_13.1-2_amd64.tar.zst \
  --hostname grafana-stack \
  --cores 4 \
  --memory 8192 \
  --swap 2048 \
  --rootfs local-zfs:50 \
  --net0 name=eth0,bridge=vmbr0,ip=192.168.100.40/24,gw=192.168.100.1 \
  --nameserver 8.8.8.8 \
  --unprivileged 1 \
  --features nesting=0 \
  --ssh-public-keys /root/.ssh/your-key.pub \
  --start 1

Here is what you should see in the Proxmox UI:

Step 2: Configure LXC Network

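LXC containers don’t use cloud-init. The --net0 option in Step 1 normally writes this configuration for you, so treat the block below as a check, or as a manual fallback if the interface didn’t come up.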

# Access container console via Proxmox UI or:

pct enter 140

# Configure network

cat > /etc/network/interfaces << 'EOF'

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet static

address 192.168.100.40

netmask 255.255.255.0

gateway 192.168.100.1

dns-nameservers 8.8.8.8 8.8.4.4

EOF

# Restart networking

systemctl restart networking

# Verify

ip addr show eth0

ping -c 3 8.8.8.8

Step 3: Install Grafana Stack

Install all monitoring components using the automated bash script. You can download the grafana-stack-setup.sh script.

# Update system

apt update && apt upgrade -y

# Install dependencies

apt install -y apt-transport-https wget curl gnupg2 ca-certificates \
  python3 python3-pip unzip

# Download installation script
wget https://gist.githubusercontent.com/sule9985/fabf9e4ebcd9bd93019bd0a5ada5d827/raw/8c7c3f8bf5aa28bba4585142ec876a001b18f63a/grafana-stack-setup.sh
chmod +x grafana-stack-setup.sh

# Run installation
./grafana-stack-setup.sh

The script installs:

- Grafana 12.3.0 - Visualization platform

- Prometheus 3.7.3 - Metrics collection and storage

- Loki 3.6.0 - Log aggregation

- AlertManager 0.29.0 - Alert routing and notifications

- Proxmox PVE Exporter 3.5.5 - Proxmox metrics collector

Installation takes up to 5-10 minutes (the run below finished in 53 seconds). A successful run ends like this:

=============================================

VERIFYING INSTALLATION

=============================================

[STEP] Checking service status...

✓ grafana-server: running

✓ prometheus: running

✓ loki: running

✓ alertmanager: running

! prometheus-pve-exporter: not configured

[STEP] Checking network connectivity...

✓ Port 3000 (Grafana): listening

✓ Port 9090 (Prometheus): listening

✓ Port 3100 (Loki): listening

✓ Port 9093 (AlertManager): listening

[SUCCESS] All services verified successfully!

[SUCCESS] Installation completed successfully in 53 seconds!

Step 4: Create Proxmox Monitoring User

Create a read-only user on Proxmox for the PVE Exporter to collect metrics.

# SSH to Proxmox host

ssh root@192.168.100.4

# Create monitoring user

pveum user add grafana-user@pve --comment "Grafana monitoring user"

# Assign read-only permissions

pveum acl modify / --user grafana-user@pve --role PVEAuditor

# Create API token

pveum user token add grafana-user@pve grafana-token --privsep 0

# Save the token output!

# Example: 8a7b6c5d-1234-5678-90ab-cdef12345678

Important: Save the full token value - it’s only shown once!
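Proxmox expects API tokens in an Authorization header of the form `PVEAPIToken=USER@REALM!TOKENID=SECRET`. The sketch below assembles that header so you can test the token with curl before wiring it into the exporter. The helper name `pve_auth_header` is my own invention, and the secret is the dummy example value, not a real token:

```shell
#!/bin/sh
# Build the Authorization header value Proxmox expects for API tokens.
# pve_auth_header is a hypothetical helper name, not part of any Proxmox tool.
pve_auth_header() {
    # $1 = user@realm, $2 = token ID, $3 = token secret
    printf 'Authorization: PVEAPIToken=%s!%s=%s\n' "$1" "$2" "$3"
}

header=$(pve_auth_header 'grafana-user@pve' 'grafana-token' '8a7b6c5d-1234-5678-90ab-cdef12345678')
echo "$header"

# To try it against the API from the monitoring LXC (not run here):
#   curl -k -H "$header" https://192.168.100.4:8006/api2/json/nodes
```

Keeping the `!` inside single quotes or a variable matters: typed inside double quotes at an interactive bash prompt, it triggers history expansion.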

Step 5: Configure PVE Exporter

Now we tell the exporter how to talk to Proxmox. SSH into your LXC:

# On grafana-stack LXC

ssh -i PATH_TO_YOUR_KEY root@192.168.100.40

# Edit PVE exporter configuration

nano /etc/prometheus-pve-exporter/pve.yml

Here’s the config - pay attention to the token part, this tripped me up the first time:

default:
  user: grafana-user@pve
  # IMPORTANT: Create the read-only user and token on the Proxmox host first (Step 4),
  # then add the token here:
  token_name: 'grafana-token'
  token_value: 'TOKEN_VALUE' # ⚠️ This only shows ONCE when you create it. If you lost it, make a new one.
  # OR use password:
  # password: "CHANGE_ME"
  verify_ssl: false # Self-signed cert? Set this to false or you'll get TLS errors

# Target Proxmox hosts
pve1:
  user: grafana-user@pve
  token_name: 'grafana-token'
  token_value: 'TOKEN_VALUE'
  verify_ssl: false
  target: https://192.168.100.4:8006 # Your Proxmox host IP + port 8006 (HTTPS, not HTTP!)

Pro tip: The token only appears once when you create it in Proxmox. If you closed the window without copying it… yeah, you’ll need to create a new one. Ask me how I know.

Fire it up:

# Start service

systemctl start prometheus-pve-exporter

# Verify it's actually running (not just "enabled")

root@grafana-stack:~# systemctl status prometheus-pve-exporter.service

● prometheus-pve-exporter.service - Prometheus Proxmox VE Exporter

Loaded: loaded (/etc/systemd/system/prometheus-pve-exporter.service; enabled; preset: enabled)

Active: active (running) since Sun 2025-11-23 11:22:06 +07; 4 days ago

Invocation: 1c35a29336b346e8b553b74a4d8fc533

Docs: https://github.com/prometheus-pve/prometheus-pve-exporter

Main PID: 10509 (pve_exporter)

Tasks: 4 (limit: 75893)

Memory: 44.4M (peak: 45.2M)

CPU: 27min 52.526s

CGroup: /system.slice/prometheus-pve-exporter.service

├─10509 /usr/bin/python3 /usr/local/bin/pve_exporter --config.file=/etc/prometheus-pve-exporter/pve.yml --web.listen-address=0.0.0.0:9221

└─10550 /usr/bin/python3 /usr/local/bin/pve_exporter --config.file=/etc/prometheus-pve-exporter/pve.yml --web.listen-address=0.0.0.0:9221

See “active (running)”? Good. If you see “failed” or errors about TLS, check your verify_ssl setting and make sure the Proxmox IP is correct.

Step 6: Configure Prometheus Scraping

Now we tell Prometheus where to grab the metrics from:

# Edit Prometheus config

nano /etc/prometheus/prometheus.yml

Add this job config to your scrape_configs section:

scrape_configs:
  # ──────────────────────────────────────────────────────────────
  # Proxmox VE monitoring via pve-exporter (runs inside the LXC)
  # ──────────────────────────────────────────────────────────────
  - job_name: 'proxmox' # Friendly name shown in Prometheus/Grafana
    metrics_path: '/pve' # Endpoint where pve-exporter serves Proxmox metrics
    params:
      target:
        ['192.168.100.4:8006'] # Your Proxmox node + GUI port
      # Takes a single address; in a cluster, point it at any one node
    static_configs:
      - targets: ['localhost:9221'] # Where pve-exporter is listening inside this LXC
        labels:
          service: 'proxmox-pve' # Custom label - helps filtering in Grafana
          instance: 'pve-host' # Logical name for your cluster/node

What’s happening here: Prometheus scrapes localhost:9221/pve (the exporter), which then queries your Proxmox API at 192.168.100.4:8006. It’s a proxy setup - Prometheus never talks directly to Proxmox.
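Put differently, each scrape is a plain HTTP GET against the exporter with the Proxmox address passed as a query parameter. This sketch reconstructs the URL Prometheus ends up requesting, which is handy for reproducing a scrape by hand. The helper name `build_scrape_url` is mine; the address, path, and target come from the job config:

```shell
#!/bin/sh
# Reconstruct the URL Prometheus requests for the 'proxmox' job:
#   http://<exporter><metrics_path>?target=<proxmox host:port>
# build_scrape_url is a hypothetical helper for illustration.
build_scrape_url() {
    # $1 = exporter address, $2 = metrics path, $3 = Proxmox target
    # Percent-encode ':' in the target, as it would be in a query string.
    target=$(printf '%s' "$3" | sed 's/:/%3A/g')
    printf 'http://%s%s?target=%s\n' "$1" "$2" "$target"
}

url=$(build_scrape_url 'localhost:9221' '/pve' '192.168.100.4:8006')
echo "$url"

# Reproduce a scrape manually from inside the LXC (not run here):
#   curl -s "$url" | head
```

If the manual curl returns metrics but the Prometheus target is still DOWN, the problem is on the Prometheus side rather than in the exporter or the token.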

Kick Prometheus to pick up the new config:

# Restart Prometheus

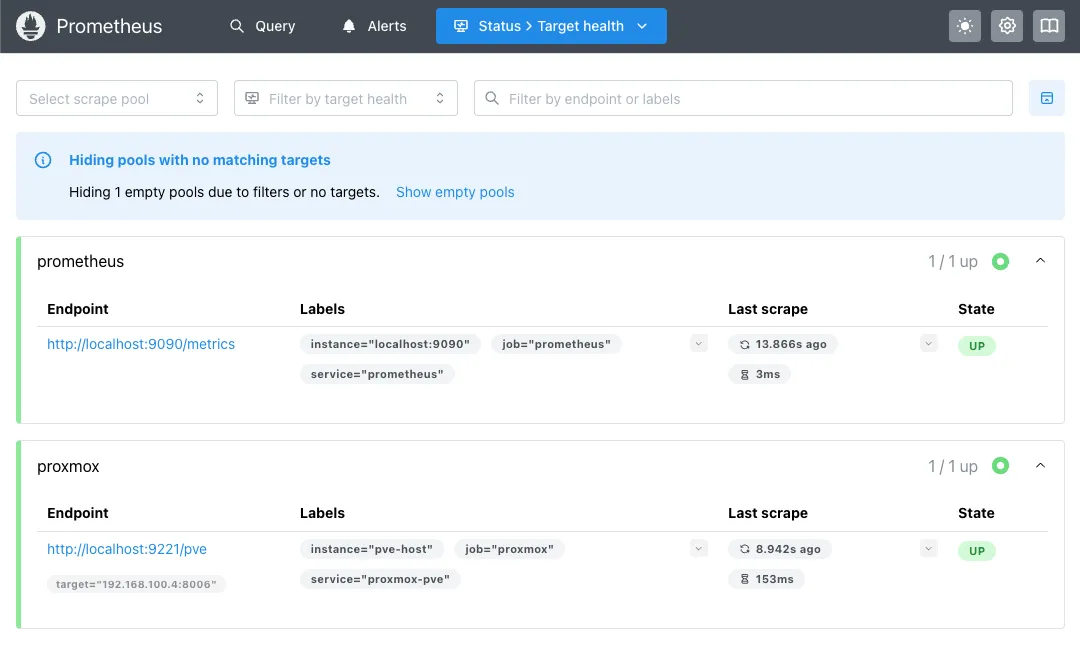

systemctl restart prometheusSanity check time. Open http://192.168.100.40:9090/targets in your browser. You should see your proxmox target showing UP in green:

If it’s DOWN, check the exporter service and your firewall rules. Don’t skip this step - if Prometheus can’t reach the exporter, nothing else will work.

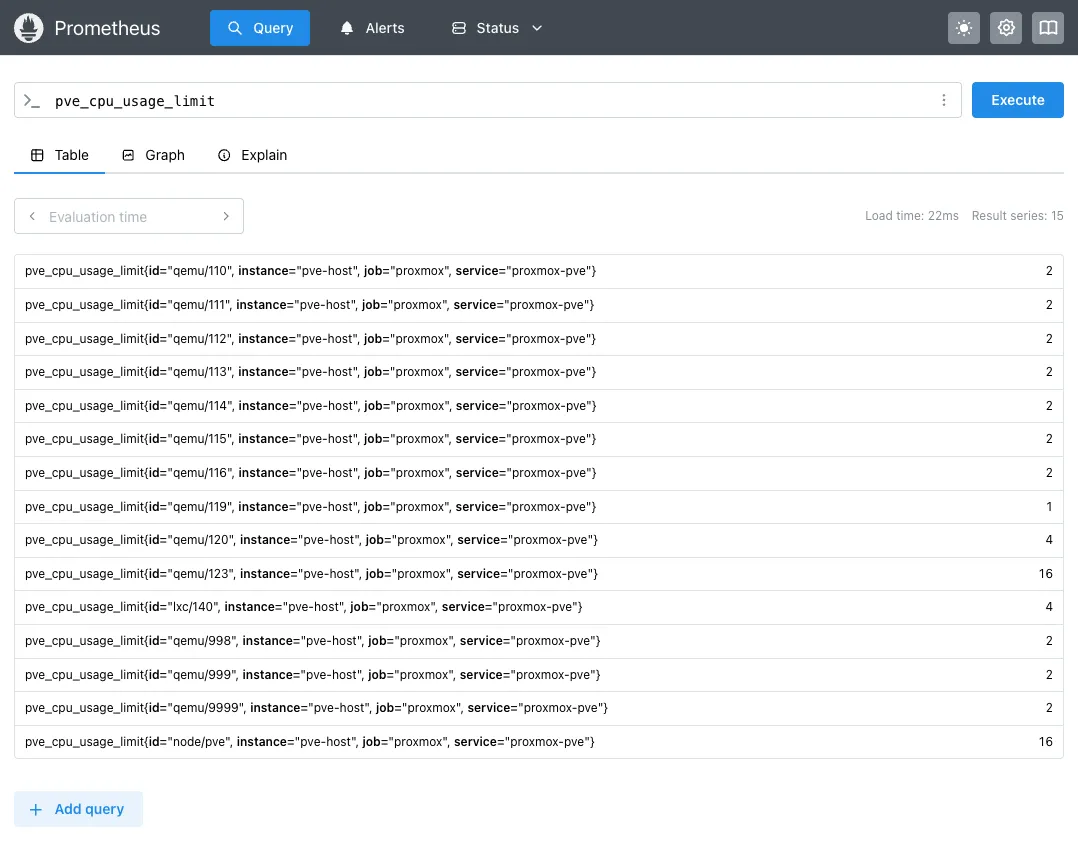

One more quick test: Click Graph in the Prometheus UI, type pve_cpu_usage_limit in the query box, hit Execute. You should see actual CPU metrics:

Seeing numbers? Perfect. Your Proxmox API is talking to the exporter, and Prometheus is scraping it correctly.
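The same check works from the command line, which is useful in scripts. Below is a sketch that pulls one series' value out of Prometheus text-format output. The sample lines are illustrative rather than captured from a real host, and `metric_value` is a name I made up:

```shell
#!/bin/sh
# Extract the value of one series from Prometheus text-format output.
# metric_value is a hypothetical helper; it expects the series exactly as
# it appears in the output, labels included.
metric_value() {
    # $1 = series, e.g. pve_up{id="node/pve"}; exposition text arrives on stdin
    awk -v series="$1" '
        /^#/ { next }                        # skip HELP/TYPE comment lines
        {
            line = $0
            value = $NF                      # value is the last field
            sub(/[ \t]+[^ \t]+$/, "", line)  # strip the value, keep the series
            if (line == series) { print value; found = 1 }
        }
        END { exit (found ? 0 : 1) }         # non-zero exit if series is absent
    '
}

# Illustrative sample of exporter output (not from a real host).
sample='# HELP pve_up Node/VM/CT-Status is online/running
# TYPE pve_up gauge
pve_up{id="node/pve"} 1.0
pve_up{id="lxc/140"} 1.0
pve_cpu_usage_ratio{id="node/pve"} 0.042'

printf '%s\n' "$sample" | metric_value 'pve_up{id="node/pve"}'
```

Against the live exporter you would pipe the output of a curl to localhost:9221/pve into the function instead of the sample.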

Quick inventory check (what we’ve got so far):

- Proxmox host (v9.1.1)

  - Read-only user grafana-user@pve with API token

- Monitoring LXC (192.168.100.40) running:

  - Grafana (v12.3.0)

  - Prometheus (v3.7.3) + PVE Exporter (v3.5.5)

  - AlertManager (v0.29.0)

  - Loki (v3.6.0)

Zero agents on the Proxmox host. Everything queries the API remotely.

A few security basics before going further:

- Change default password: Immediately change Grafana’s default admin/admin credentials on first login

- Configure firewall: Restrict access to ports 3000 (Grafana), 9090 (Prometheus), 9093 (AlertManager) to your internal network only

- Use reverse proxy: For external access, deploy a reverse proxy (Nginx/Traefik) with TLS and authentication

- Keep API token permissions minimal: The Proxmox API token has read-only access (PVEAuditor role), limiting exposure if compromised

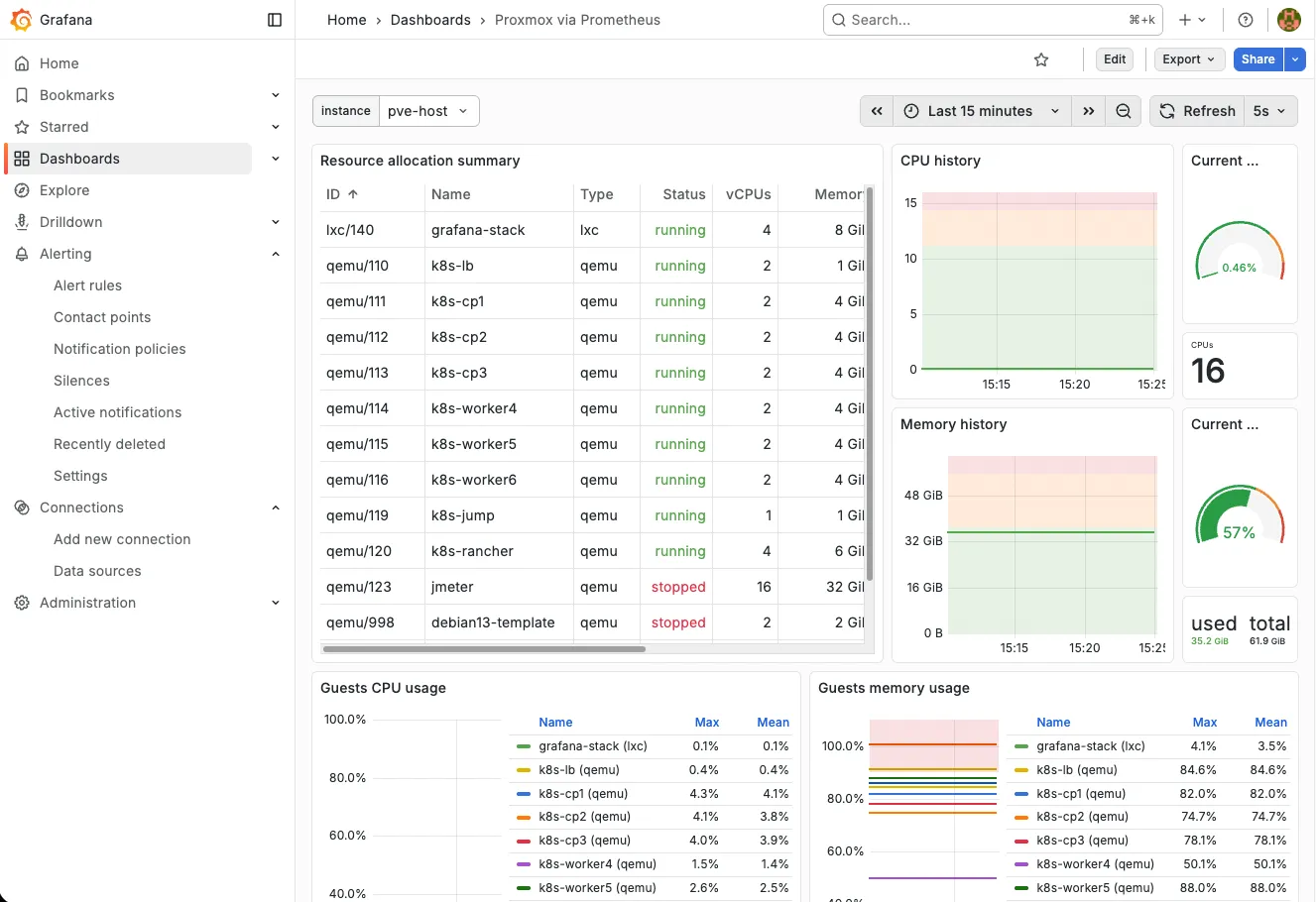

Step 7: Import Grafana Dashboard

Time for the fun part - actually seeing your data. Head to Grafana:

- Open http://192.168.100.40:3000 in your browser

- Log in with admin/admin (seriously, change this password on first login)

- Navigate to Dashboards → New → Import

- Enter Dashboard ID: 10347 (the widely used Proxmox via Prometheus dashboard - it’s excellent)

- Click Load

- Select Prometheus as the datasource

- Click Import

Boom. You should see something like this:

Step 8: Set Up Alerting

Pretty dashboards are nice, but what you really need is something to wake you up at 3am when your node’s on fire. Let’s set up notifications via Telegram (and optionally Slack).

Step 8a: Create Notification Channels

Telegram Bot (Recommended)

This takes like 2 minutes:

- Open Telegram, search for @BotFather

- Send /newbot

- Pick a name and username for your bot

- Save the Bot Token (you’ll need this in a minute)

- Start a chat with your new bot (send /start)

- Get your Chat ID by visiting: https://api.telegram.org/bot<YOUR_TOKEN>/getUpdates

  - Look for "chat":{"id":123456789} in the JSON response

  - That number is your Chat ID

Pro tip: Keep this browser tab open. You’ll paste both values into AlertManager config shortly.
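If you’d rather not eyeball the JSON, the Chat ID can be pulled out with standard tools. This is a sketch using sed, assuming the response contains a `"chat":{"id":...}` object as described in the steps. The sample response is trimmed and made up, `extract_chat_id` is my own name, and a real JSON parser such as jq is the sturdier choice if you have one installed:

```shell
#!/bin/sh
# Pull a chat ID out of a Telegram getUpdates response.
# extract_chat_id is a hypothetical helper. It pattern-matches instead of
# parsing JSON, so treat it as a convenience, not a robust parser.
extract_chat_id() {
    # Reads JSON on stdin. Whitespace is stripped first so pretty-printed
    # responses match too. Group chat IDs are negative, hence the optional '-'.
    # If several updates are present, the last chat ID wins.
    id=$(tr -d ' \t\r\n' | sed -n 's/.*"chat":{"id":\(-\{0,1\}[0-9][0-9]*\).*/\1/p')
    [ -n "$id" ] && printf '%s\n' "$id"
}

# Made-up, trimmed example of a getUpdates response.
sample='{"ok":true,"result":[{"update_id":1,"message":{"message_id":1,"from":{"id":111,"is_bot":false},"chat":{"id":123456789,"type":"private"},"text":"/start"}}]}'

printf '%s' "$sample" | extract_chat_id
```

With a real bot you would feed it the output of a curl to the getUpdates URL. An empty result usually means you haven’t sent the bot a message yet.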

Slack Webhook (Optional, but nice for team alerts)

If you’ve got a team Slack:

- Go to https://api.slack.com/apps

- Create New App → From scratch

- Enable Incoming Webhooks

- Add New Webhook to Workspace

- Pick your channel (e.g., #infrastructure-alerts)

- Copy the Webhook URL (starts with https://hooks.slack.com/...)

I use Telegram for “wake me up” alerts and Slack for “FYI the team should know” stuff.

Step 8b: Configure Prometheus Alert Rules

How Alerting Works (The Quick Version)

Alerting has two parts, and mixing them up is where most people get confused:

Prometheus Alert Rules = What fires alerts

- Checks metrics every 30 seconds: “Is CPU > 85%? Is disk > 90%?”

- Adds labels like severity: critical or notification_channel: telegram

- Sends matching alerts to AlertManager

AlertManager = Where alerts go

- Reads the labels Prometheus sent

- Routes to Telegram, Slack, email, whatever

- Groups similar alerts (so you don’t get 50 spam messages)

- Deduplicates and silences repeats

Why split it up? Because Prometheus is good at math (evaluating metrics), and AlertManager is good at logistics (routing notifications). Keeps things clean.

What we’re building:

- Telegram gets everything (CPU warnings, disk full, node down)

- Slack optional for team notifications

- Two-tier alerts: warning (80-85%) and critical (90-95%)

- Smart suppression - if critical fires, warning shuts up
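The two-tier idea is easy to reason about outside Prometheus. Here is a sketch of the decision a warning/critical rule pair encodes, using the 85%/95% CPU thresholds from this guide. `classify_usage` is a made-up helper, and it ignores the `for:` duration, which in Prometheus additionally requires the condition to hold for a while before the alert fires:

```shell
#!/bin/sh
# Classify a usage ratio (0-1) the way a warning/critical rule pair would.
# classify_usage is a hypothetical helper; thresholds are arguments so the
# same function covers CPU (0.85/0.95) and disk (0.80/0.90).
classify_usage() {
    # $1 = ratio, $2 = warning threshold, $3 = critical threshold
    awk -v v="$1" -v warn="$2" -v crit="$3" 'BEGIN {
        if (v > crit)      print "critical"
        else if (v > warn) print "warning"
        else               print "ok"
    }'
}

for ratio in 0.42 0.87 0.97; do
    echo "cpu $ratio -> $(classify_usage "$ratio" 0.85 0.95)"
done
```

One difference from the real thing: above the critical threshold Prometheus fires both rules, and it is AlertManager’s inhibition that is meant to hide the warning.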

This setup focuses on monitoring the Proxmox host infrastructure only, not individual VMs/LXCs.

- Why this approach? It simplifies the monitoring stack and reduces complexity

- For VM/LXC monitoring: Deploy dedicated exporters (Node Exporter, application-specific exporters) inside each VM/LXC for more accurate, granular metrics

- Separation of concerns: Host-level monitoring (this guide) + VM-level monitoring (separate exporters) provides better visibility than a single solution trying to do everything

flowchart TD

subgraph Prometheus["📊 Prometheus"]

A[Metrics Collection<br/>PVE Exporter] --> B[Alert Rules Evaluation<br/>Every 30s-1m]

B --> C{Condition<br/>Met?}

end

C -->|"Yes"| D[Send Alert to AlertManager]

C -->|"No"| E[Continue Monitoring]

E --> A

subgraph AlertManager["🔔 AlertManager"]

D --> F[Receive Alerts]

F --> G[Grouping & Deduplication<br/>group_wait: 10s-30s]

G --> H{Route by<br/>Label}

H --> I[Apply Inhibition Rules<br/>Suppress warnings if critical firing]

end

subgraph Examples["Example Alert Conditions"]

J1["CPU > 85% (warning)<br/>label: notification_channel=telegram"]

J2["Storage > 80% (warning)<br/>label: notification_channel=telegram"]

end

subgraph Notifications["📬 Notification Channels"]

K["🔴 Telegram<br/>Operational Alerts<br/>• Host CPU/Memory/Disk<br/>• Storage Alerts<br/>• Repeat every 1-2h"]

L["💬 Slack<br/>(Optional)<br/>"]

end

I -->|"notification_channel:<br/>telegram"| K

I -->|"notification_channel:<br/>slack"| L

style Prometheus fill:#e1f5ff,stroke:#0066cc,stroke-width:2px

style AlertManager fill:#fff4e1,stroke:#ff9900,stroke-width:2px

style Examples fill:#f0f0f0,stroke:#666,stroke-width:1px,stroke-dasharray: 5 5

style Notifications fill:#e8f5e9,stroke:#00aa00,stroke-width:2px

style K fill:#4a90e2,color:#fff,stroke:#2563eb,stroke-width:2px

style L fill:#0088cc,color:#fff,stroke:#0066aa,stroke-width:2px

style C fill:#ffd700,stroke:#ff8800

style H fill:#ffd700,stroke:#ff8800

Alright, let’s create the actual alert rules. SSH into your LXC and edit:

# Create alert rules file

nano /etc/prometheus/rules/proxmox.yml

Here’s the full config. I’ve included the essentials - CPU, memory, disk, storage. You can add more later:

groups:
  # ============================================================================
  # Group 1: Host Alerts (Critical Infrastructure)
  # ============================================================================
  - name: proxmox_host_alerts
    interval: 30s
    rules:
      # Proxmox Host Down
      - alert: ProxmoxHostDown
        expr: pve_up{id="node/pve"} == 0
        for: 1m
        labels:
          severity: critical
          component: proxmox
          alert_group: host_alerts
          notification_channel: telegram
        annotations:
          summary: '🔴 Proxmox host is down'
          description: "Proxmox host 'pve' is unreachable or down for more than 1 minute."

      # High CPU Usage
      - alert: ProxmoxHighCPU
        expr: pve_cpu_usage_ratio{id="node/pve"} > 0.85
        for: 5m
        labels:
          severity: warning
          component: proxmox
          alert_group: host_alerts
          notification_channel: telegram
        annotations:
          summary: '⚠️ High CPU usage on Proxmox host'
          description: 'CPU usage is {{ $value | humanizePercentage }} on Proxmox host (threshold: 85%).'

      # Critical CPU Usage
      - alert: ProxmoxCriticalCPU
        expr: pve_cpu_usage_ratio{id="node/pve"} > 0.95
        for: 2m
        labels:
          severity: critical
          component: proxmox
          alert_group: host_alerts
          notification_channel: telegram
        annotations:
          summary: '🔴 CRITICAL CPU usage on Proxmox host'
          description: 'CPU usage is {{ $value | humanizePercentage }} on Proxmox host (threshold: 95%).'

      # High Memory Usage
      - alert: ProxmoxHighMemory
        expr: (pve_memory_usage_bytes{id="node/pve"} / pve_memory_size_bytes{id="node/pve"}) > 0.85
        for: 5m
        labels:
          severity: warning
          component: proxmox
          alert_group: host_alerts
          notification_channel: telegram
        annotations:
          summary: '⚠️ High memory usage on Proxmox host'
          description: 'Memory usage is {{ $value | humanizePercentage }} on Proxmox host (threshold: 85%).'

      # Critical Memory Usage
      - alert: ProxmoxCriticalMemory
        expr: (pve_memory_usage_bytes{id="node/pve"} / pve_memory_size_bytes{id="node/pve"}) > 0.95
        for: 2m
        labels:
          severity: critical
          component: proxmox
          alert_group: host_alerts
          notification_channel: telegram
        annotations:
          summary: '🔴 CRITICAL memory usage on Proxmox host'
          description: 'Memory usage is {{ $value | humanizePercentage }} on Proxmox host (threshold: 95%).'

      # High Disk Usage
      - alert: ProxmoxHighDiskUsage
        expr: (pve_disk_usage_bytes{id="node/pve"} / pve_disk_size_bytes{id="node/pve"}) > 0.80
        for: 10m
        labels:
          severity: warning
          component: proxmox
          alert_group: host_alerts
          notification_channel: telegram
        annotations:
          summary: '⚠️ High disk usage on Proxmox host'
          description: 'Disk usage is {{ $value | humanizePercentage }} on Proxmox host (threshold: 80%).'

      # Critical Disk Usage
      - alert: ProxmoxCriticalDiskUsage
        expr: (pve_disk_usage_bytes{id="node/pve"} / pve_disk_size_bytes{id="node/pve"}) > 0.90
        for: 5m
        labels:
          severity: critical
          component: proxmox
          alert_group: host_alerts
          notification_channel: telegram
        annotations:
          summary: '🔴 CRITICAL disk usage on Proxmox host'
          description: 'Disk usage is {{ $value | humanizePercentage }} on Proxmox host (threshold: 90%).'

  # Group 2: Storage Alerts (Telegram - Operational Alerts)
  - name: proxmox_storage_alerts
    interval: 1m
    rules:
      # Storage Pool High Usage
      # The usage series only carry an id label (e.g. storage/pve/local),
      # so the annotations reference $labels.id.
      - alert: ProxmoxStorageHighUsage
        expr: (pve_disk_usage_bytes{id=~"storage/.*"} / pve_disk_size_bytes{id=~"storage/.*"}) > 0.80
        for: 10m
        labels:
          severity: warning
          component: proxmox
          alert_group: storage_alerts
          notification_channel: telegram
        annotations:
          summary: '⚠️ High usage on storage {{ $labels.id }}'
          description: "Storage '{{ $labels.id }}' usage is {{ $value | humanizePercentage }} (threshold: 80%)."

      # Storage Pool Critical Usage
      - alert: ProxmoxStorageCriticalUsage
        expr: (pve_disk_usage_bytes{id=~"storage/.*"} / pve_disk_size_bytes{id=~"storage/.*"}) > 0.90
        for: 5m
        labels:
          severity: critical
          component: proxmox
          alert_group: storage_alerts
          notification_channel: telegram
        annotations:
          summary: '🔴 CRITICAL usage on storage {{ $labels.id }}'
          description: "Storage '{{ $labels.id }}' usage is {{ $value | humanizePercentage }} (threshold: 90%)."

Step 8c: Configure AlertManager Routing

nano /etc/alertmanager/alertmanager.yml

global:
  resolve_timeout: 5m

# Routing tree - directs alerts to receivers based on labels
route:
  # Default grouping and timing
  group_by: ['alertname', 'severity', 'alert_group']
  group_wait: 10s # Wait before sending first notification
  group_interval: 10s # Wait before sending notifications for new alerts in group
  repeat_interval: 12h # Resend notification every 12 hours if still firing
  # Default receiver for unmatched alerts
  receiver: 'telegram-default'
  # Child routes - matched in order, first match wins
  routes:
    # Route 1: Slack for host_alerts (critical infrastructure)
    - match:
        notification_channel: slack
      receiver: 'slack-channel'
      group_wait: 10s
      group_interval: 10s
      repeat_interval: 1h # Repeat every hour for critical infrastructure
      continue: false # Stop matching after this route
    # Route 2: Telegram for telegram channel alerts (storage)
    - match:
        notification_channel: telegram
      receiver: 'telegram-operational'
      group_wait: 30s
      group_interval: 30s
      repeat_interval: 2h # Repeat every 2 hours for operational alerts
      continue: false

# Notification receivers
receivers:
  # Slack receiver for critical infrastructure (host alerts)
  - name: 'slack-channel'
    slack_configs:
      - api_url: 'SLACK_WEBHOOK'
        channel: '#alerts-test'
        username: 'Prometheus AlertManager'
        icon_emoji: ':warning:'
        title: '{{ .GroupLabels.alertname }} - {{ .GroupLabels.severity | toUpper }}'
        text: |
          {{ range .Alerts }}
          *Alert:* {{ .Labels.alertname }}
          *Severity:* {{ .Labels.severity }}
          *Component:* {{ .Labels.component }}
          *Summary:* {{ .Annotations.summary }}
          *Description:* {{ .Annotations.description }}
          {{ end }}
        send_resolved: true
        # Optional: Mention users for critical alerts
        # color: '{{ if eq .Status "firing" }}danger{{ else }}good{{ end }}'

  # Telegram receiver for operational alerts (storage)
  - name: 'telegram-operational'
    telegram_configs:
      - bot_token: 'BOT_TOKEN'
        chat_id: CHAT_ID_NUMBERS
        parse_mode: 'HTML'
        message: |
          {{ range .Alerts }}
          <b>{{ .Labels.severity | toUpper }}: {{ .Labels.alertname }}</b>
          {{ .Annotations.summary }}
          <b>Details:</b>
          {{ .Annotations.description }}
          <b>Component:</b> {{ .Labels.component }}
          <b>Group:</b> {{ .Labels.alert_group }}
          <b>Status:</b> {{ .Status }}
          {{ end }}
        send_resolved: true

  # Default Telegram receiver (fallback)
  - name: 'telegram-default'
    telegram_configs:
      - bot_token: 'BOT_TOKEN'
        chat_id: CHAT_ID_NUMBERS
        parse_mode: 'HTML'
        message: |
          {{ range .Alerts }}
          <b>{{ .Labels.severity | toUpper }}: {{ .Labels.alertname }}</b>
          {{ .Annotations.summary }}
          {{ .Annotations.description }}
          <b>Component:</b> {{ .Labels.component }}
          {{ end }}
        send_resolved: true

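# Inhibition rules - suppress alerts based on other alerts
inhibit_rules:
  # If a critical alert is firing, suppress warning alerts in the same alert group.
  # alertname can't be part of `equal`: the warning and critical rules have
  # different names (ProxmoxHighCPU vs ProxmoxCriticalCPU), so it would never match.
  # This is coarse: any critical host alert mutes all host warnings.
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['component', 'alert_group']

Before you reload anything, validate your configs. Trust me on this - a typo will break everything: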

# Validate configs (do this FIRST!)

promtool check rules /etc/prometheus/rules/proxmox.yml

amtool check-config /etc/alertmanager/alertmanager.yml

# If validation passed, reload

curl -X POST http://localhost:9090/-/reload

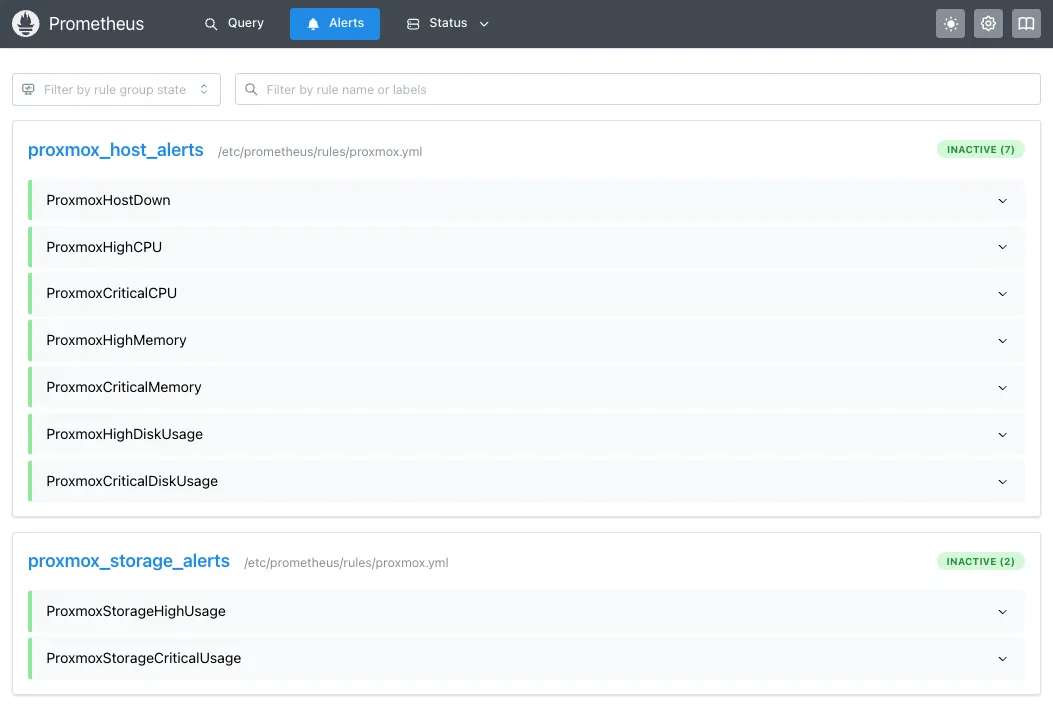

systemctl restart alertmanagerSanity check: Open http://192.168.100.40:9090/alerts in your browser. You should see all your alert rules listed (even if they’re not firing yet):

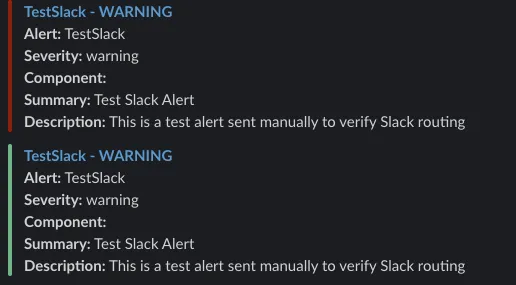

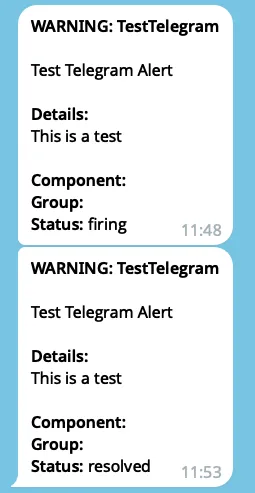
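To make the routing tree from Step 8c concrete: AlertManager walks the child routes in order and falls back to the default receiver when none matches. Here is a sketch of that decision, keyed on the notification_channel label. It models only this guide’s tree, not AlertManager’s general matching, and `pick_receiver` is a made-up name:

```shell
#!/bin/sh
# Mimic which receiver this guide's routing tree picks for an alert,
# based on its notification_channel label. pick_receiver is hypothetical.
pick_receiver() {
    # $1 = value of the notification_channel label (may be empty)
    case "$1" in
        slack)    echo 'slack-channel' ;;         # Route 1
        telegram) echo 'telegram-operational' ;;  # Route 2
        *)        echo 'telegram-default' ;;      # no child route matched
    esac
}

for channel in slack telegram ''; do
    echo "notification_channel='$channel' -> $(pick_receiver "$channel")"
done
```

The last case is the one to remember: an alert with a missing or misspelled notification_channel label still gets delivered, just through the fallback receiver.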

Step 9: Test Alerts

Don’t skip this step. You don’t want to find out your alerts don’t work when your node is actually on fire.

Fire off some test alerts to make sure routing works:

# Test Slack alert (if you set it up)
curl -X POST http://localhost:9093/api/v2/alerts \
  -H "Content-Type: application/json" \
  -d '[{
    "labels": {
      "alertname": "TestSlack",
      "notification_channel": "slack",
      "severity": "warning"
    },
    "annotations": {
      "summary": "Test Slack Alert",
      "description": "This is a test alert sent manually to verify Slack routing"
    }
  }]'

# Test Telegram alert
curl -X POST http://localhost:9093/api/v2/alerts \
  -H "Content-Type: application/json" \
  -d '[{
    "labels": {
      "alertname": "TestTelegram",
      "notification_channel": "telegram",
      "severity": "warning"
    },
    "annotations": {
      "summary": "Test Telegram Alert",
      "description": "This is a test"
    }
  }]'

Check your phone/Slack. Within 10-30 seconds you should see the messages arrive.
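Typing those JSON bodies by hand gets old quickly. Here is a sketch of a small helper that builds the payload for any channel, so re-testing later is a one-liner. The function names are mine; the endpoint and fields are the ones used in the curl commands:

```shell
#!/bin/sh
# Build the JSON body for a manual test alert.
# test_alert_payload and send_test_alert are hypothetical helper names.
test_alert_payload() {
    # $1 = alert name, $2 = notification_channel, $3 = severity
    # Values are inserted verbatim, so keep them free of quotes and backslashes.
    printf '[{"labels":{"alertname":"%s","notification_channel":"%s","severity":"%s"},' "$1" "$2" "$3"
    printf '"annotations":{"summary":"Test %s alert","description":"Manual routing test"}}]\n' "$2"
}

send_test_alert() {
    # Posts the payload to the local AlertManager (same endpoint as above).
    test_alert_payload "$@" | curl -s -X POST http://localhost:9093/api/v2/alerts \
        -H 'Content-Type: application/json' --data-binary @-
}

# Print the payload; call send_test_alert with the same arguments to fire it.
test_alert_payload TestTelegram telegram warning
```

On the LXC, running send_test_alert TestSlack slack warning exercises the Slack route the same way.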

Troubleshooting Common Issues

Here are the issues that drove me nuts when I first set this up. Save yourself some time.

PVE Exporter Won’t Start / Shows “Not Configured”

This one got me for like 20 minutes the first time. The service starts, but when you check status it says “not configured” or just dies.

What’s probably wrong:

Config file doesn’t exist or is in the wrong place

cat /etc/prometheus-pve-exporter/pve.yml
# If you get "No such file", well... there's your problem

API token format is wrong - The format is picky:

- Should be: token_name: "grafana-token" and token_value: "8a7b6c5d-1234-5678..."

- NOT the full PVEAPIToken=user@pve!token=value string

- If you copied the wrong thing, the exporter will silently fail

Wrong Proxmox IP or can’t reach it

# Single quotes keep bash from treating the "!" as history expansion
curl -k https://192.168.100.4:8006/api2/json/nodes \
  -H 'Authorization: PVEAPIToken=grafana-user@pve!grafana-token=YOUR_TOKEN'
# Should return JSON. If timeout/connection refused, check your network/firewall

Check the actual error in logs

journalctl -u prometheus-pve-exporter -f
# Usually tells you exactly what's broken
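Since the token mix-up fails silently, a quick format check before restarting the exporter can save some head-scratching. This sketch assumes token secrets are UUID-shaped, like the example in Step 4, and flags a pasted full PVEAPIToken string. `check_token_value` is a made-up helper, not something the exporter ships:

```shell
#!/bin/sh
# Sanity-check a value before putting it in token_value in pve.yml.
# check_token_value is a hypothetical helper; the UUID shape is an
# assumption based on the example token in Step 4.
check_token_value() {
    # $1 = candidate token_value
    case "$1" in
        PVEAPIToken=*|*'!'*)
            echo 'full token string pasted; use only the secret after the last ='
            return 1 ;;
    esac
    if printf '%s\n' "$1" | grep -Eq '^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$'; then
        echo 'ok'
    else
        echo 'not UUID-shaped; check for truncation or stray characters'
        return 1
    fi
}

check_token_value '8a7b6c5d-1234-5678-90ab-cdef12345678'
check_token_value 'PVEAPIToken=grafana-user@pve!grafana-token=8a7b6c5d-1234-5678-90ab-cdef12345678' || true
```

A passing check only says the value looks right. Whether Proxmox accepts it is what the curl test against the API tells you.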

Prometheus Target Shows “Context Deadline Exceeded”

Translation: Prometheus can’t scrape the exporter in time. Usually means network issues or SSL problems.

Quick fixes:

Firewall blocking port 8006 - Can the LXC reach Proxmox?

# From inside LXC
curl -k https://192.168.100.4:8006
# If this times out, your firewall's blocking it

SSL certificate problems - Self-signed cert on Proxmox? Set verify_ssl: false in /etc/prometheus-pve-exporter/pve.yml (already in our config)

Scraping too slow - Increase timeout in /etc/prometheus/prometheus.yml:

scrape_configs:
  - job_name: 'proxmox'
    scrape_interval: 30s # The timeout can't exceed the interval, so raise both
    scrape_timeout: 30s # Bump from default 10s

Grafana Shows “No Data” on Dashboard

Dashboard imported fine, but all the panels are empty. Frustrating.

Debug steps:

Is Prometheus actually working?

- Grafana → Connections → Data sources → Prometheus

- Click Save & test - it should report success

- If it fails, Prometheus isn’t running or the URL is wrong

Are metrics actually being collected?

- Open http://192.168.100.40:9090 (Prometheus UI)

- Graph tab → query pve_up

- Should show 1 if exporter is working

- If nothing shows up, go back to fixing the exporter

Time range issue - Dashboard looking at last 6 hours, but you just started collecting data? Change time range to “Last 15 minutes” and see if data appears

Alerts Configured But Nothing Happens

You set up all the alerts, but your Telegram/Slack is crickets even when you know CPU is maxed.

What to check:

Are the alerts even firing in Prometheus?

- Open http://192.168.100.40:9090/alerts

- Green = OK (not firing)

- Yellow = Pending (condition met, waiting for “for” duration)

- Red = FIRING (should be sending to AlertManager)

Is AlertManager receiving them?

curl http://localhost:9093/api/v2/alerts
# Should show active alerts if any are firing

Check AlertManager logs - routing might be broken:

journalctl -u alertmanager -f
# Look for errors about failed receivers or routing

Did you test manually? Go back to Step 9, fire a test alert. If that doesn’t work, your Telegram token or Slack webhook is wrong.

Permission Denied / 403 Errors from Proxmox API

The exporter’s hitting the API but getting rejected.

Usually one of these:

Wrong permissions on the user

# On Proxmox host
pveum acl list
# Should show grafana-user@pve with the PVEAuditor role on path "/"
# If not, go back to Step 4 and fix it

Token got nuked somehow (happens after Proxmox updates sometimes)

# Recreate it
pveum user token remove grafana-user@pve grafana-token
pveum user token add grafana-user@pve grafana-token --privsep 0
# Update the token in pve.yml with the new value, then restart prometheus-pve-exporter

Token expired - Tokens don’t expire by default, but check Proxmox UI (Datacenter → Permissions → API Tokens) just in case someone set an expiration

Monitoring Metrics

Key metrics available:

Host Metrics:

- pve_cpu_usage_ratio - CPU usage (0-1)

- pve_memory_usage_bytes / pve_memory_size_bytes - Memory usage

- pve_disk_usage_bytes / pve_disk_size_bytes - Disk usage

- pve_up{id="node/pve"} - Host availability

Storage Metrics:

- pve_disk_usage_bytes{id=~"storage/.*"} - Storage pool usage

- pve_storage_info - Storage pool information

Alert Rules Summary

| Alert | Threshold | Duration | Channel | Severity |
|---|---|---|---|---|
| Host Alerts (Telegram - Critical Infrastructure) | | | | |
| ProxmoxHostDown | == 0 | 1 min | Telegram | Critical |
| ProxmoxHighCPU | >85% | 5 min | Telegram | Warning |
| ProxmoxCriticalCPU | >95% | 2 min | Telegram | Critical |
| ProxmoxHighMemory | >85% | 5 min | Telegram | Warning |
| ProxmoxCriticalMemory | >95% | 2 min | Telegram | Critical |
| ProxmoxHighDiskUsage | >80% | 10 min | Telegram | Warning |
| ProxmoxCriticalDiskUsage | >90% | 5 min | Telegram | Critical |
| Storage Alerts (Telegram - Operational) | | | | |
| ProxmoxStorageHighUsage | >80% | 10 min | Telegram | Warning |
| ProxmoxStorageCriticalUsage | >90% | 5 min | Telegram | Critical |

The Bottom Line

If you followed along, you now have:

- Grafana dashboards showing exactly what’s happening on your Proxmox host

- Telegram alerts that’ll ping you before things explode (not after)

- Comprehensive monitoring without installing anything on the Proxmox host itself

All of this running in a single 8GB LXC container. No bloated VMs, no agents cluttering up your host, just clean API-based monitoring.

What you should do next:

- Tune those alert thresholds - 85% CPU might be fine for your workload, or way too high

- Add more storage pools if you have them (just copy the alert rules)

- Set up a reverse proxy with SSL if you’re exposing Grafana externally

- Maybe add VM/LXC monitoring later (different exporters, separate guide)

The real test: Will you actually notice when something breaks? Run those test alerts (Step 9) again in a week to make sure everything’s still working. AlertManager configs have a way of breaking silently.

And hey, when you do get woken up at 3am by a disk space alert, at least you’ll know before your backups fail and users start complaining. Ask me how I know that’s worth the setup time.
